tl;dr: Choosing the right technology stack can mean the difference between SUCCESS and FAILURE in data science. It can also mean the difference between ‘I Feel Productive’ and ‘Everything I try takes so much time’. When deciding on a toolkit or stack, be sure to learn from others, consider implementation partners and always remain cautious.

Note: In addition to this post, I am surveying people to determine which data science technology stacks are being used right now. Survey responses will remain confidential, and the survey can be accessed via this link: http://bit.ly/ToolkitSurvey (it takes less than 200 seconds!). Those who complete the survey will get a copy of the report, which will detail the pros and cons of popular data science technology stacks.

Recently I’ve been thinking about practical data science and what the components of a good technology stack are. Wikipedia defines a technology stack as “the layers of components or services that are used to provide a software solution or application”. In data science this usually means the components (software and hardware) that you use to SOURCE, STORE, CONVERT, TRANSFORM, EXPLORE, MODEL, VISUALISE and PRODUCTION MANAGE your data and generate insights.

A good technology stack helps accelerate the process to build, develop and use models to derive value. Because not all data environments or business environments are the same, a ‘good’ stack is relative to the operating context. For example, you may work with a lot of unstructured data, need to provide real-time model scores, deal with exabytes of data or work with a team that do not have deep technology skills.

If you’re involved in architecting a technology stack, it is important to recognise these characteristics of your own context and optimise the stack for them.

If you perform data analysis or build models, you will know it is often quite easy to begin by trialling many different approaches (e.g. for classification problems we may trial random forests, logistic regression, neural networks and support vector machines) to determine which is most effective and hence should be used to drive actions. This trial-and-error approach means that if we choose an inappropriate method we can quickly discard it without suffering a large delay to the delivery of insights.
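To make the trial-and-error idea concrete, here is a minimal Python sketch that compares the four methods named above using 5-fold cross-validation. It assumes scikit-learn is installed and uses a synthetic dataset from `make_classification` as a stand-in for real data; the model settings are illustrative choices, not recommendations.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import cross_val_score
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic data stands in for a real classification problem (assumption).
X, y = make_classification(n_samples=500, n_features=20, random_state=0)

# The four candidate methods; scale-sensitive models get a StandardScaler first.
candidates = {
    "random_forest": RandomForestClassifier(n_estimators=100, random_state=0),
    "logistic_regression": make_pipeline(StandardScaler(), LogisticRegression(max_iter=1000)),
    "neural_network": make_pipeline(
        StandardScaler(),
        MLPClassifier(hidden_layer_sizes=(32,), max_iter=2000, random_state=0),
    ),
    "svm": make_pipeline(StandardScaler(), SVC()),
}

# Mean 5-fold cross-validated accuracy for each candidate.
scores = {name: cross_val_score(model, X, y, cv=5).mean() for name, model in candidates.items()}

# Print candidates from best to worst, then the winner.
for name, score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:20s} {score:.3f}")

best = max(scores, key=scores.get)
print("best:", best)
```

Discarding a method that scores poorly costs one line here, which is exactly the cheap, fast iteration that choosing a technology stack does not allow.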

However, when building a technology stack we can take no such approach. Implementing a technology stack takes a considerable amount of time, often ‘locking in’ the environment when complete. If this environment is unsuitable, performing good data science (which is what we would like to do most AND how value is created) becomes increasingly difficult. Choosing a technology stack is a Very Important Thing to Get Right.

As technology stacks change infrequently, most data scientists will not have had a chance to work with a large number of them. In addition, over the last few years many new components have appeared that promise to solve the problems that yesterday’s tech couldn’t. Just some of the components available today are displayed below.

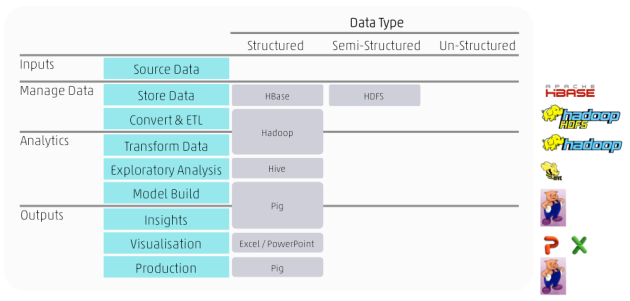

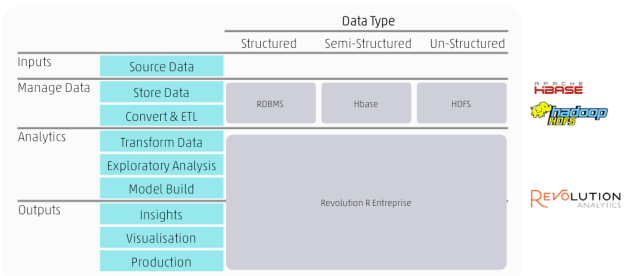

If we separate these components into their area of core competency (what they do best), we get something like this.

So, we now know that (a) choosing the right architecture is a very important factor in a successful data science team and (b) most data scientists have experience with only a small subset of architectures.

So how do you decide on the right architecture?

The answer usually includes the following:

- Network: Talk to other data scientists (somebody somewhere has done this research already – find them)

- Partner: Find a good system implementation partner

- Re-Use: (if appropriate) Use what you’ve used successfully in the past

- Do Not Delegate Lightly: You may be considering delegating the decision to an IT/CIO function. Unless you have a trusting relationship with your IT/CIO group, be careful choosing this option. IT may be optimising for ease of support or minimal cost rather than functionality or benefits to modelling (which will cost more in the long run).

- Conferences: Find others using a stack you’re considering (and discover the pros and cons)

- Finally: Don’t commit to transitioning (turning off the old approach) until you’re SURE that the new way is working

Here are three simple example technology stacks:

- Oracle Data Mining (a simple transition solution for those beginning data science who already have Oracle ERP)

- Hadoop Three-Tier Stack (a vanilla Hadoop stack; Yahoo originated this approach)

- Revolution Analytics Real-Time Big Data Analysis (RTBDA) stack (good for real-time, eager-scoring deployment)